The term Privacy by Design (PbD) has been defined as a development method for privacy-friendly systems and services. It became a legal requirement for data controllers under the EU General Data Protection Regulation (GDPR), which has applied since 2018. PbD goes beyond mere technical solutions, addressing organizational procedures and business models as well. Considering privacy from the design phase onward, i.e. from the very beginning of the development process, is essential for the successful application of privacy mechanisms throughout the lifecycle of a system or service.

Introduction

The European Convention on Human Rights of the Council of Europe of 1950 acknowledged privacy in its Article 8, which states that “Everyone has the right to respect for his private and family life, his home and his correspondence”. Privacy and data protection are also safeguarded by the Charter of Fundamental Rights of the European Union from the year 2000.

In our current networked society, however, we cannot rely solely on laws to protect privacy effectively as a fundamental human right. There are constant reports about breaches and large-scale misuse of personal data by governments or businesses. Cases in point are the NSA’s mass surveillance, as revealed by Edward Snowden, and the Facebook-Cambridge Analytica data scandal. Our society has become increasingly digitized and connected, with seemingly invisible personal data collection through smart devices and with Artificial Intelligence-based data analytics that make it possible to derive highly sensitive personal information. Privacy as a fundamental right is therefore in jeopardy.

Privacy measures that have been developed over the last decades can be built into the design of systems and services to ensure technical compliance with legal privacy principles. This can prevent data misuse and thereby enhance user trust. These measures include anonymization and pseudonymization technologies for enforcing the principle of data minimization, which limits the extent of data collection and processing to what is strictly necessary in relation to the purposes for which the data are processed. Data minimization is therefore the most efficient strategy for data protection: the fewer personal data are collected, the fewer can be breached. Furthermore, if users act anonymously, no personal data are collected at all, thus avoiding privacy issues.

The term Privacy by Design (PbD) – or, more precisely, its variant Data Protection by Design – has been defined as a development method for privacy-friendly systems and services. It became a legal requirement for data controllers under the EU General Data Protection Regulation (GDPR), which has applied since 2018. PbD goes beyond mere technical solutions, addressing organizational procedures and business models as well [1]. Considering privacy from the design phase onward, i.e. from the very beginning of the development process, is essential for the successful application of privacy mechanisms throughout the lifecycle of a system or service.

Historical perspective

While the Dutch Data Protection Commissioner and the Information and Privacy Commissioner of Ontario coined the term “Privacy-enhancing Technologies” (PETs) in a report in 1995, research and development of PETs had started much earlier, more than 40 years ago: David Chaum developed the most fundamental PETs back in the 1980s. These included cryptographic protocols for anonymous payment schemes and anonymous communication. For instance, Mix Nets (the “conceptual ancestor” of today’s Tor network) route pre-encrypted messages over a series of proxy servers, effectively hiding who is communicating with whom. In 1981, i.e. almost 15 years before the Internet was commercialized and broadly used, Chaum already envisioned the need for privacy and anonymity of communication: “Another cryptographic problem, ‘the traffic analysis problem’ (the problem of keeping confidential who converses with whom, and when they converse), will become increasingly important with the growth of electronic mail.”

In the late 1990s, Ann Cavoukian, the former Information and Privacy Commissioner of Ontario, coined the term Privacy by Design, which she defined through seven fundamental principles that provide an approach for considering privacy from the very beginning of and throughout the entire system development process [2].

The GDPR took up the privacy by design approach: by stipulating Data Protection by Design and by Default (Art. 25), applicable since 2018, it acknowledges the central role of this approach in protecting an individual’s privacy.

Technical perspective

As Cavoukian’s fundamental privacy by design principles still “remain vague and leave many open questions about their application when engineering systems” [3], privacy engineering has emerged as a field that provides methodologies, techniques and tools to engineer systems in line with the PbD principles. In particular, the Privacy Impact Assessment (PIA) is an important methodology for privacy engineering that can assist organizations in identifying, managing and mitigating privacy risks.

Starting from the data minimization principle (which can be achieved by the “unlinkability” of data and individuals) is a basic first step toward engineering systems in line with PbD principles. In addition, Art. 25(1) GDPR emphasizes pseudonymization as an example of an appropriate technical and organizational measure designed to implement data-protection principles, such as data minimization.
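As a rough illustration of how data minimization and pseudonymization can be combined in practice, the following Python sketch keeps only the fields needed for a stated purpose and replaces a direct identifier with a keyed hash (HMAC-SHA256). It is a minimal sketch under stated assumptions, not a recommended or complete implementation: the function names, the record layout and the demo key are hypothetical, and a keyed hash is only one of several possible pseudonymization techniques.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Derive a pseudonym from an identifier using a keyed hash (HMAC-SHA256).

    Without the secret key, the pseudonym cannot easily be linked back to
    the identifier; the key is the "additional information" that has to be
    kept separately from the pseudonymized data.
    """
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize_record(record: dict, needed_fields: set, id_field: str, secret_key: bytes) -> dict:
    """Keep only the fields needed for the purpose and swap the direct
    identifier for a pseudonym (hypothetical helper for illustration)."""
    reduced = {name: value for name, value in record.items() if name in needed_fields}
    reduced["pseudonym"] = pseudonymize(record[id_field], secret_key)
    return reduced


# Hypothetical example data; the key would be stored apart from the records.
demo_key = b"demo-key-kept-separately"
record = {
    "name": "Alice Example",
    "email": "alice@example.org",
    "postcode": "12345",
    "reading_kwh": 3.2,
}
reduced = minimize_record(record, {"reading_kwh"}, "email", demo_key)
```

In this sketch, `reduced` contains only `reading_kwh` and a 64-character pseudonym. The same identifier always maps to the same pseudonym under the same key, so records remain linkable to each other for the stated purpose. Pseudonymized data of this kind still count as personal data under the GDPR, because whoever holds the key can re-identify the individuals.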

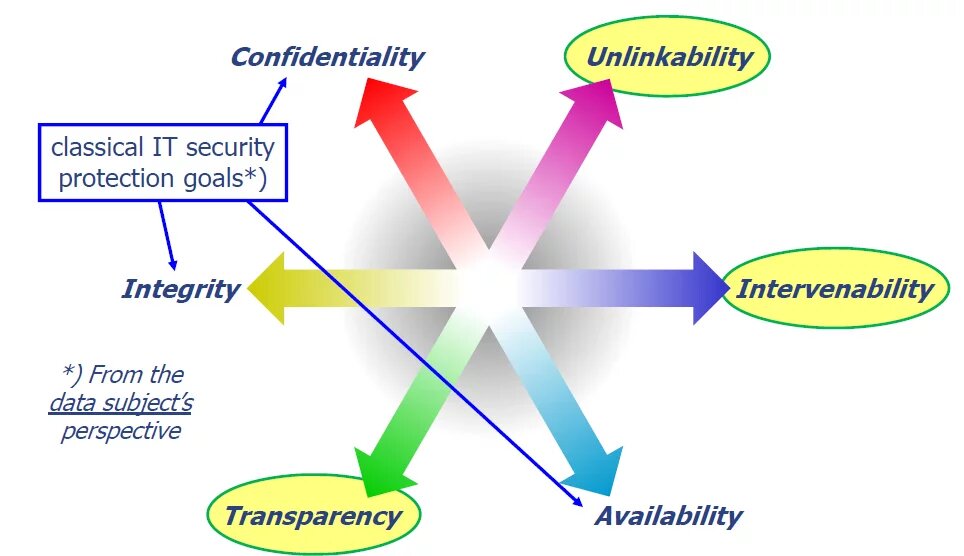

In addition to data minimization, the GDPR’s other basic privacy principles need to be enforced: These include fairness and transparency of data processing for the individuals concerned (data subjects), intervenability by granting individuals the right to control their personal data and to intervene against data processing, as well as the classic security principles of data confidentiality, integrity and availability. These privacy protection goals may also contradict each other, as Figure 1 illustrates; solving such issues may be challenging. For example, transparency for patients as to who has accessed their Electronic Health Records (EHRs) may be achieved by keeping a log of all instances of access. However, such logging creates additional data with regard to patients and health care personnel, thereby conflicting with the goal of data minimization. Furthermore, these log data may be highly sensitive; e.g. the fact that a psychiatrist has accessed someone’s EHR.

Figure 1: Data Protection Goals (including the classical IT security goals) that can also conflict with each other (Marit Hansen, Meiko Jensen and Martin Rost, 2015) (ULD, 2015).

Socio-political perspective

PbD is an important enabler for making data processing legally (GDPR) compliant, privacy-friendly, socially acceptable and trusted by the individuals concerned. Failure to comply with privacy by design can cause severe privacy problems that will be difficult to address later.

We see an example in the Dutch government’s unsuccessful project for rolling out extensive smart metering to households in the Netherlands in 2008. It had to be postponed because of consumers’ widespread privacy protests and legal complaints. As smart metering measurements of real-time electricity consumption in households may leak sensitive information about the lifestyle of their members (whether someone is at home, cooking, using the coffee machine at night, or even which TV channels they watch), consumers saw their privacy as endangered. One lesson learnt was that an alternative PbD approach, based on PETs for obfuscating and anonymizing smart metering data, could have adequately addressed these privacy concerns while ultimately achieving legal compliance and creating consumer trust.
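To give a flavour of what a PET for obfuscating smart metering data might look like, the following Python sketch uses additive masking: each household adds a random mask to its reading, and the masks are constructed so that they cancel out in the sum. The party collecting the values can then compute the neighbourhood total without seeing any individual reading. This is an illustrative sketch only and does not describe what was proposed or deployed in the Netherlands. The function names, the modulus and the readings are hypothetical, and the masks are generated in one place here for brevity, whereas in a real protocol each pair of households would agree on its shared random value without revealing it to the collector.

```python
import secrets
from typing import List

# Hypothetical modulus; it must be larger than any possible total reading.
MODULUS = 2**32


def pairwise_masks(n: int) -> List[int]:
    """Return n masks that sum to zero modulo MODULUS.

    For each pair (i, j), a random value is added to household i's mask
    and subtracted from household j's mask, so all values cancel in the sum.
    """
    masks = [0] * n
    for i in range(n):
        for j in range(i + 1, n):
            r = secrets.randbelow(MODULUS)
            masks[i] = (masks[i] + r) % MODULUS
            masks[j] = (masks[j] - r) % MODULUS
    return masks


def mask_readings(readings_wh: List[int]) -> List[int]:
    """Hide each individual reading (in watt-hours) behind its mask."""
    masks = pairwise_masks(len(readings_wh))
    return [(reading + mask) % MODULUS for reading, mask in zip(readings_wh, masks)]


def aggregate(masked_readings: List[int]) -> int:
    """Sum the masked readings; the masks cancel, leaving the true total."""
    return sum(masked_readings) % MODULUS


# Hypothetical readings for four households, in watt-hours.
readings = [420, 1310, 75, 980]
masked = mask_readings(readings)
total = aggregate(masked)  # equals sum(readings) as long as it is below MODULUS
```

The design choice here is to protect individual values while keeping the aggregate exact, which is typically what billing or grid balancing at neighbourhood level would need. Other approaches, such as adding calibrated noise to readings, trade some accuracy for protection instead.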

Another example of the ways in which PbD and PETs can be enablers is evident in the outcome of the recent Schrems II decision by the European Court of Justice from July 2020. This states that contractual tools alone may not be sufficient to legitimize the data transfer between the EU and U.S. in compliance with the GDPR. In reaction to this decision, in November 2020 the European Data Protection Board (EDPB), which oversees the enforcement of the GDPR, proposed measures including PETs that will supplement contractual clauses for achieving GDPR compliance for such cross-border data transfers. As a result, supplementary PET measures may serve particularly as enablers for the use of non-European cloud servers.

What is happening now?

In October 2020, the EDPB adopted the final version of its general guidelines regarding the requirements of PbD for data controllers, i.e. the entities that determine the purposes and means of processing personal data. These guidelines provide recommendations on how controllers, processors and producers can cooperate to achieve PbD [4]. In parallel, the European Union Agency for Cybersecurity (ENISA) has been elaborating technical guidelines for achieving PbD via pseudonymization and other privacy-enhancing techniques.

Main stakeholders

Pursuant to Art. 25 GDPR, the controller has a legal obligation to implement PbD to protect the rights of data subjects. However, even if they are not directly mentioned in Art. 25, other actors also have responsibilities (see Figure 2 for more details). Producers are supposed to create products and services that implement PbD and thereby help controllers to fulfill their obligations. The GDPR therefore also suggests that PbD should be considered in the context of public tenders. Since PbD remains an obligation for the controller when data processing is outsourced, the controller needs to select carefully the data processors that are contracted, so that the PbD obligations are fulfilled. This should also be reflected in a data processing agreement between the two parties. Other important actors are the data protection authorities, which monitor data controllers for compliance with the PbD obligation and can issue administrative fines in cases of non-compliance.

Figure 2: Main stakeholders for PbD and their GDPR roles

What happens next?

Ann Cavoukian emphasizes that the PbD Principle “Respect for Privacy” extends to the need for user interfaces to be “human-centered, user-centric and user-friendly, so that informed privacy decisions may be reliably exercised” [2]. Designing usable privacy mechanisms remains a challenge, however, especially as privacy is often only a secondary goal for users.

Researchers and industry are already paying much attention to PbD for machine learning. This includes algorithmic transparency (including the explainability of decision making), anonymity or pseudonymity for users who have contributed their data for analytics, and algorithmic fairness in avoiding bias and discrimination. Since transparency and fairness of machine learning can conflict with the goal of data minimization, solving such privacy tradeoffs can pose challenges that will require interdisciplinary expertise.

Further reading

- Danezis, G., Domingo-Ferrer, J., Hansen, M., Hoepman, J.-H., Le Metayer, D., Tirtea, R., Schiffner, S. "Privacy and Data Protection by Design – from policy to engineering". In: European Union Agency for Network and Information Security (ENISA) report, December 2014, ISBN 978-92-9204-108-3.

- Cavoukian, Ann. Privacy by design: The 7 foundational principles. Information and privacy commissioner of Ontario, Canada 5 (2009): 12.

- Gürses, Seda, Carmela Troncoso, and Claudia Diaz. Engineering privacy by design. Computers, Privacy & Data Protection 14.3 (2011): 25.

- European Data Protection Board. Guidelines 4/2019 on Article 25 Data Protection by Design and by Default, adopted on 13 November 2019.

- Hansen, Marit, Jensen, Meiko and Rost, Martin. Protection goals for privacy engineering. Proceedings of the 2015 IEEE Security and Privacy Workshops. IEEE, 2015.

- Hoepman, Jaap-Henk. Privacy Is Hard and Seven Other Myths. Achieving Privacy through Careful Design. Cambridge, MA: MIT Press. October 2021.

- 32nd International Conference of Data Protection and Privacy Commissioners, Jerusalem, Israel 27-29 October, 2010 Resolution on Privacy by Design

The opinions expressed in this text are solely those of the author/s and do not necessarily reflect the views of the Heinrich Böll Stiftung Tel Aviv and/or its partners.