Concerns about misinformation on issues at the intersection of science and politics—as in the case of COVID-19—have recently been met with a flurry of interventions that attempt to help people separate truth from lies online. For example, Facebook has partnered with independent fact-checkers to implement a feature that flags posts as false and briefly hinders access to the content.

Elsewhere, we have noted that while interventions of this kind can be useful, their efficacy will be limited if we fail to complement them with solutions intended to correct deficits not only in knowledge of the truth, but also in the motivation to accept that knowledge.

In response to this argument, some might assert that individuals whose beliefs cut against scientific consensus in favor of misinformation are either unreachable or exist in such small numbers that devising solutions for them is not worth the investment. We argue that this view is misguided for at least two reasons:

First: Small, motivated groups could propel misperceptions into the mainstream.

Decades of social science research have shown that people do not always process information carefully. Instead, we are “cognitive misers” who prefer to reduce effortful reasoning by relying on simple mental structures called “heuristics.” This is true even in encounters with misinformation.

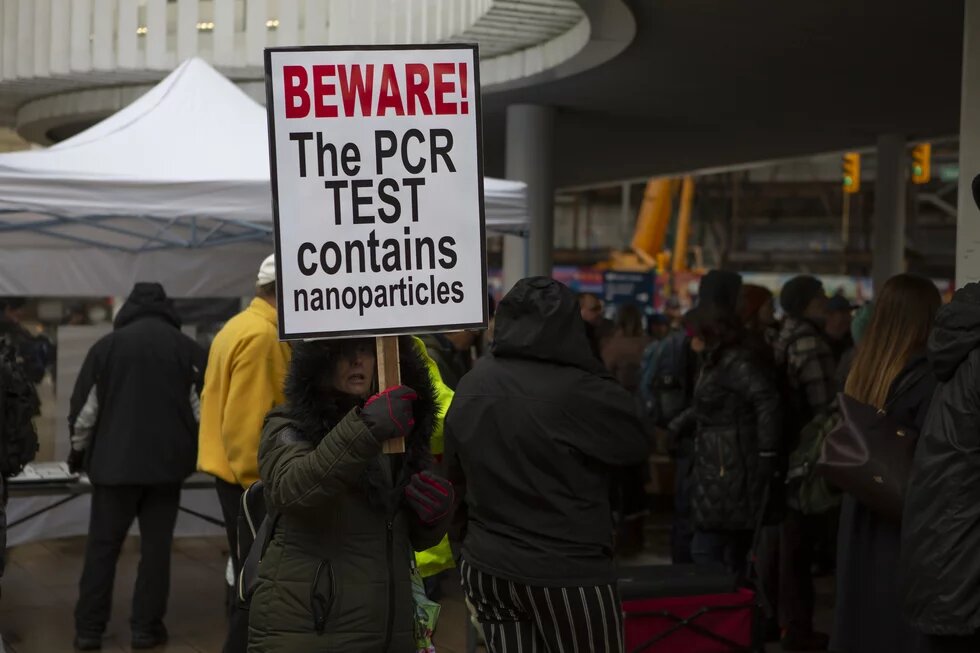

One heuristic people use is their perception of public opinion. Typically lacking empirical data, people rely on their “quasi-statistical sense” of public opinion, or an anecdote- and media-driven perception of which beliefs are most popular and acceptable. Loud groups of people who hold uncommon beliefs but who visibly assert them (e.g., in conversation or media coverage) can create the perception that minority beliefs are quite popular. Over time, a “spiraling” effect can occur, as people who think they hold a minority view (even if they do not) fall silent out of a desire to conform. In this way, minority opinions—including false beliefs—have the potential to appear normalized and mainstream.

This “spiral of silence” phenomenon suggests that it is risky to deprioritize interventions that are tailored to reach individuals who are both misinformed and passionately committed to their views, simply on the grounds that there are relatively few of them. Even if most people are not motivated to reject fact-checks or other information-based correctives and would, in theory, be receptive to a solution like that in Figure 1, motivation-oriented solutions remain a useful approach that could stem the spiraling of certain beliefs (like misperceptions) before they become mainstream in the first place.

Second: Failing to account for motivation may put us at risk of backfire effects, especially from fact-checkers.

By “backfire effects,” we mean that we risk pushing people to strengthen their false views, rather than to correct them. For example, in the context of vaccination (in one experimental study about the flu vaccine and another on the MMR vaccine), researchers found that participants most opposed to a vaccine prior to receiving a fact-based intervention about its efficacy became even less likely to say they would get the vaccine for themselves or their child. In the context of COVID-19, these findings suggest that corrective interventions on vaccines may backfire among people whose strongly held beliefs diverge from scientific consensus (e.g., those who may be misinformed).

However, it must be noted that backfire effects of this kind have been strongly debated, with one recent analysis arguing that much additional research is needed to confidently assert that such effects even exist, much less precisely how they work. Still, the lack of empirical clarity around how and when correctives might produce backfire effects (or not) should give us pause as we prioritize strategies to debunk falsehoods with facts.

Finally, it is worth recognizing that many efforts to correct misinformation must grapple with both individuals’ motivations to protect their beliefs about the topic at hand and their motivations to reject information from specific sources. Misinformation about COVID-19 should thus be treated as a multi-layered risk communication problem. For example, in the United States, we know that more politically conservative individuals are particularly distrustful of fact-checkers and particularly skeptical about the severity of COVID-19, arguably raising the potential for fact-checking efforts to backfire as two motivational factors compound in favor of rejecting the intervention.

Accounting for motivation

Despite the above risks of neglecting motivation-based interventions, it can be appealing to prioritize knowledge-based interventions such as fact-checking, in part because it is easier to implement these solutions within existing economic incentive structures and interfaces that define modern communication, especially social media.

Consider that one reason falsehoods flourish on social media is that these platforms exploit a human tendency to amplify moral outrage. Further, the algorithms underlying social media platforms can homogenize the information and people we see as they learn our preferences and present content that aligns with (and reinforces) them. Given that social media’s economic success hinges, at least partially, on these decisions (and others) to leverage the same motivational states that can exacerbate misinformation, it seems unlikely that Silicon Valley will gravitate toward motivation-based solutions. For example, platforms seem unlikely to adjust their algorithms to highlight heterogeneity in our social networks that might encourage accountability for our beliefs, or to eliminate "like" functions in an effort to temper moral grandstanding. Indeed, as others have said: “it seems that [Facebook’s] strategy has never been to manage the problem of dangerous content, but rather to manage the public’s perception of the problem.”

We also know that the algorithms that enable social media advertisers to “micro target” their messaging to those most amenable to persuasion can also be exploited by purveyors of misinformation. In theory, then, it seems that these same tools could be used to tailor the delivery of corrective interventions in a manner that accounts for individuals’ differing motivational states. In other words, perhaps we could use this technology “for good.” However, this approach would first require those who design and create correctives to recognize that “one-size-fits-all” messaging about “the facts” will not work.

To this point, strategies to curb the spread of misinformation could present information in ways that are less likely to trigger a motivated rejection of facts and a possible gravitation toward falsehoods. In the case of COVID-19, for example, risk communication research shows that people are more likely to accept the severity of the pandemic and to enact protective behaviors if the information they receive is tailored to their values and personal life experiences, rather than if it highlights cold statistics about death rates, which can trigger counterproductive fear responses. Correctives to misinformation, therefore, should follow this same logic, packaging “the facts” in a way that resonates with audiences’ pre-existing values and experiences of day-to-day life.

Given that falsehoods about something like COVID-19 can be a matter of life or death, we should use every tool we can, as effectively as possible, to slow their spread. While fact-focused interventions can be useful at times, we should ask ourselves if seizing this low-hanging fruit will really satiate our need for effective solutions. As we have shown, a growing collection of insights from the science of science communication suggests that it will not. We would thus be well-served to reach above and beyond current solutions—looking in particular to motivational factors—even if doing so will require a significant lift.

Nicole M. Krause is a PhD student in the Life Sciences Communication department at the University of Wisconsin – Madison, where she is also a member of the Science, Media, and the Public (SCIMEP) research group. Nicole’s work focuses on how people make sense of (mis)information about scientific topics, with a particular emphasis on finding ways to facilitate productive interactions among polarized social groups on controversial topics like human gene editing or artificial intelligence.

Isabelle Freiling is a predoctoral researcher in the Department of Communication at the University of Vienna. Isabelle’s research focuses on media use and effects, as well as science and political communication, with a particular focus on social media and misinformation.

The opinions expressed in this text are solely those of the author/s and do not necessarily reflect the views of the Heinrich Böll Stiftung Tel Aviv and/or its partners.