Many experts are concerned that the curation of content on social media platforms limits our chances of encountering challenging viewpoints. In the words of Sunstein, we might live in “communication universes in which we hear only what we choose and only what comforts and pleases us.” Filter bubbles and digital echo chambers are therefore seen as among the main causes of polarization and radicalization online. Indeed, becoming familiar with perspectives different from our own is a necessary condition for deliberative democratic public spheres. Moreover, digital echo chambers can form the breeding ground for extremism, anger and outrage. In discussing these phenomena, two things are important to understand. First, the role algorithmic curation can play in creating and amplifying bias is limited; and second, whether or not users are vulnerable to a lack of exposure to diverse content and perspectives largely depends on their own motivation and their larger information environment.

Historical, Technical and Psychological Background

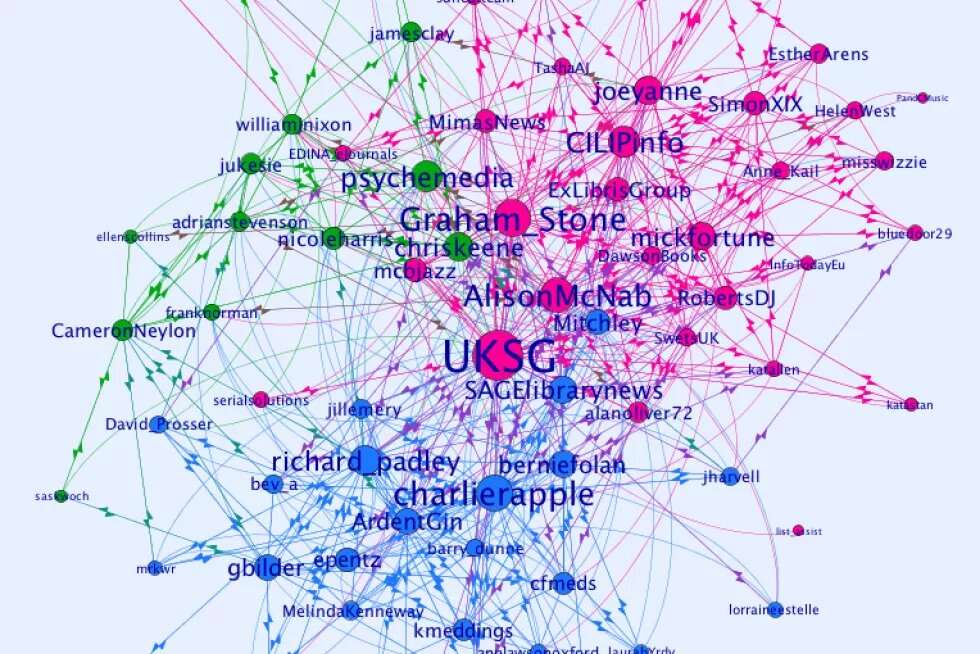

The root cause of filter bubbles and digital echo chambers lies in biased information feeds on social media. These are a consequence of the (biased) selection of the content to which users of social media platforms are exposed. To understand where a biased selection comes from, let us consider how the elements of an algorithmically curated newsfeed are sorted and prioritized. In general, two main principles determine the newsfeed. The first is explicit personalization (also called self-selected personalization), which describes all processes in which users of the platform actively opt in and out of information, for example by following other users or joining groups. The second is implicit personalization (or pre-selected personalization), in which an AI-driven algorithm predicts user preferences based on past behavior and by matching a user's data with those of other users through collaborative filtering.
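The two principles can be illustrated with a small sketch. It is not the method of any actual platform: the users, posts, engagement values, and the `follow_bonus` weight are all invented for illustration, and user-based collaborative filtering with cosine similarity is only one of many possible ways to implement implicit personalization.

```python
from math import sqrt

# Hypothetical engagement history: user -> {post: interaction strength}.
ENGAGEMENT = {
    "ana":   {"post_a": 1.0, "post_b": 1.0},
    "ben":   {"post_a": 1.0, "post_b": 1.0, "post_c": 1.0},
    "chloe": {"post_d": 1.0, "post_e": 1.0},
}

# Explicit personalization: accounts each user has chosen to follow (hypothetical).
FOLLOWS = {"ana": {"ben"}, "ben": set(), "chloe": set()}

# Hypothetical authorship of each post.
AUTHORS = {
    "post_a": "dan", "post_b": "dan", "post_c": "ben",
    "post_d": "eve", "post_e": "eve",
}


def cosine_similarity(a, b):
    """Cosine similarity between two sparse engagement vectors (dicts)."""
    shared = set(a) & set(b)
    dot = sum(a[item] * b[item] for item in shared)
    norm_a = sqrt(sum(v * v for v in a.values()))
    norm_b = sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def implicit_scores(user, engagement):
    """Implicit personalization: score unseen posts by what similar users engaged with."""
    seen = engagement.get(user, {})
    scores = {}
    for other, items in engagement.items():
        if other == user:
            continue
        similarity = cosine_similarity(seen, items)
        if similarity <= 0:
            continue  # users with no overlapping behavior contribute nothing
        for item, strength in items.items():
            if item not in seen:
                scores[item] = scores.get(item, 0.0) + similarity * strength
    return scores


def rank_feed(user, engagement, follows, authors, follow_bonus=1.0):
    """Combine implicit scores with a bonus for posts by followed accounts."""
    scores = implicit_scores(user, engagement)
    seen = engagement.get(user, {})
    for item, author in authors.items():
        if author in follows.get(user, set()) and item not in seen:
            scores[item] = scores.get(item, 0.0) + follow_bonus
    # Highest score first; ties broken alphabetically for a stable order.
    return sorted(scores, key=lambda item: (-scores[item], item))


if __name__ == "__main__":
    print(rank_feed("ana", ENGAGEMENT, FOLLOWS, AUTHORS))
```

With this invented data, the only candidate for the user `ana` is `post_c`: it is recommended both because a similar user engaged with it and because `ana` follows its author. The posts that only `chloe` engaged with never enter the ranking, which is the kind of narrowing that the two personalization principles can produce together.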

The terms “digital echo chamber” and “filter bubble” are often used interchangeably to describe biased news feeds, but the two concepts differ in the type of personalization that is mainly responsible for the bias. The notion of the echo chamber is linked more to mechanisms of explicit personalization, whereas the filter bubble suggests that implicit personalization strategies are more dominant. An echo chamber arises when a user chooses to follow biased accounts or to join a group with a clearly biased perspective, creating a biased information menu; one might liken this to an individual’s choice to buy a clearly left-leaning or right-leaning newspaper. Since users themselves are the main driver, they also possess the agency to break out of the echo chamber. In the narrative of the filter bubble, users have a more passive role and are perceived as victims of a technology that automatically limits their exposure to information that would challenge their world view. Both concepts are based on the idea that humans generally prefer to avoid exposing themselves to information that challenges their beliefs. Being confronted with a different interpretation of the world that challenges one’s own can cause cognitive stress. To reduce this stress, it is presumed, individuals develop strategies to avoid such exposure; this is called confirmation bias. But this bias clashes with other motivations and character traits, such as wanting to be informed or to be surprised. This means that the potential vulnerability to being locked in a digital echo chamber or filter bubble depends on how pronounced the need to avoid cognitive dissonance is in relation to other information needs.

Current Developments

Empirical evidence on the phenomenon is mixed. Research has clearly demonstrated the existence of digital echo chambers, in particular among non-mainstream groups. Yet in these cases, the role of algorithmic curation is probably limited. Several studies have demonstrated that the average person is exposed to a greater diversity of opinions and perspectives when navigating the internet than when using offline news. A related idea is that the potential of algorithms to create biased “information universes” depends on the availability of other information sources. In many Western countries, algorithmically curated newsfeeds are only part of a much broader information menu, which also includes television, such as the main news broadcasts, online news websites, newspapers and the radio. In many countries of the Global South, however, reliance on algorithmically curated newsfeeds is much higher. Coupled with a general lack of diversity in available news sources, this creates an information environment in which users are more likely to be trapped in digital echo chambers or filter bubbles.

Main Actors

The main actors involved are social media platforms, actors that have insight into how to manipulate the news feed in their favor, and users of social media platforms. Social media platforms are incentivized to optimize users' engagement with their platforms, because as for-profit organizations they earn their income by monetizing attention to the ads they display on the newsfeed. Hence, the content and the user interface are designed to keep users on the platform, including by providing content that is relevant to the user and avoiding content that might drive the user away from the platform, such as content that is perceived to challenge users’ values or ideology. Many actors and organizations have learned to create content that will raise engagement and therefore has a higher chance of being displayed on newsfeeds. This includes content that provokes rage or is highly biased or polarizing. However, the audience for such content is limited. Many users either do not engage with political content on social media platforms at all, or see it as an opportunity to broaden their horizons.

Recent Developments

All in all, there seems to be a mismatch between the strong theoretical assumptions regarding filter bubbles and echo chambers and the consequences currently observed in the public sphere. There is no harm in people seeking out information that confirms their attitudes and beliefs; they do so online and offline. If anything, the reality of social media and algorithmic filter systems today seems to be that they inject diversity rather than reduce it, with the exception of highly polarized or radicalized groups that seek out online communities to connect with like-minded users on a global level. With this realization, attention in research and public discourse has shifted from viewing filter bubbles and digital echo chambers as a problem that affects all users in the same way, to understanding how the underlying principles create and amplify fringe groups that hold extreme or radical attitudes. In particular, there is a growing interest in understanding how the functionalities of implicit personalization can be abused to amplify content from within these groups so that it reaches a wider audience.

Future Developments

Social media platforms are taking several steps to minimize what they call “coordinated inauthentic behavior.” The term refers to actors that use bots or trolls, for example, to manipulate the prioritization algorithm on social media platforms and make biased or polarizing content more visible. As social media platforms take more control over the content that is available and recommended, many users who were looking for engagement with strongly biased or polarizing content are moving to specialized platforms. Thus, the online communities that form around highly politicized topics or viewpoints are turning more and more from filter bubbles into echo chambers. The choice to be part of a highly biased information universe is increasingly up to the end user.

At the same time, many news organizations are working towards developing diverse news recommenders that allow users to find challenging but relevant information. Going forward, they aim to develop recommender systems that translate the editorial mission of news organizations into algorithmic news recommendations. We are likely to see an increase in content that is hand-picked and curated by human journalists. Providing alternative ways to manage the information overflow online gives users the option to burst their filter bubbles, to choose and manage which filter bubble they want to be a part of, and to adjust the walls of these bubbles to be translucent enough to see beyond and expand the limits of their current view.

Further reading

- Benkler, Y., Faris, R., & Roberts, H. Network Propaganda: Manipulation, Disinformation, and Radicalization in American Politics. Oxford University Press, 2018.

- Bruns, A. Are Filter Bubbles Real? John Wiley & Sons, 2019.

- Helberger, N. “On the Democratic Role of News Recommenders.” Digital Journalism, 7(8), 993-1012, 2019.

- Jackson, S. J., Bailey, M., & Welles, B. F. #HashtagActivism: Networks of Race and Gender Justice. MIT Press, 2020.

- Pariser, E. The Filter Bubble: How the New Personalized Web is Changing What We Read and How We Think. Penguin, 2011.

- Sunstein, C. R. #Republic. Princeton University Press, 2018.

- Zuiderveen Borgesius, F., Trilling, D., Möller, J., Bodó, B., De Vreese, C. H., & Helberger, N. “Should we worry about filter bubbles?” Internet Policy Review: Journal on Internet Regulation, 5(1), 2016.

The opinions expressed in this text are solely those of the author(s) and do not necessarily reflect the views of the Heinrich Böll Stiftung Tel Aviv and/or its partners.